Event-Driven Visual-Tactile Sensing and Learning for Robots

Many everyday tasks require multiple sensory modalities to perform successfully. For example, consider fetching a carton of soymilk from the fridge; humans use vision to locate the carton and can infer from a simple grasp how much liquid the carton contains. This inference is performed robustly using a power-efficient neural substrate — compared to current artificial systems, human brains require far less energy.

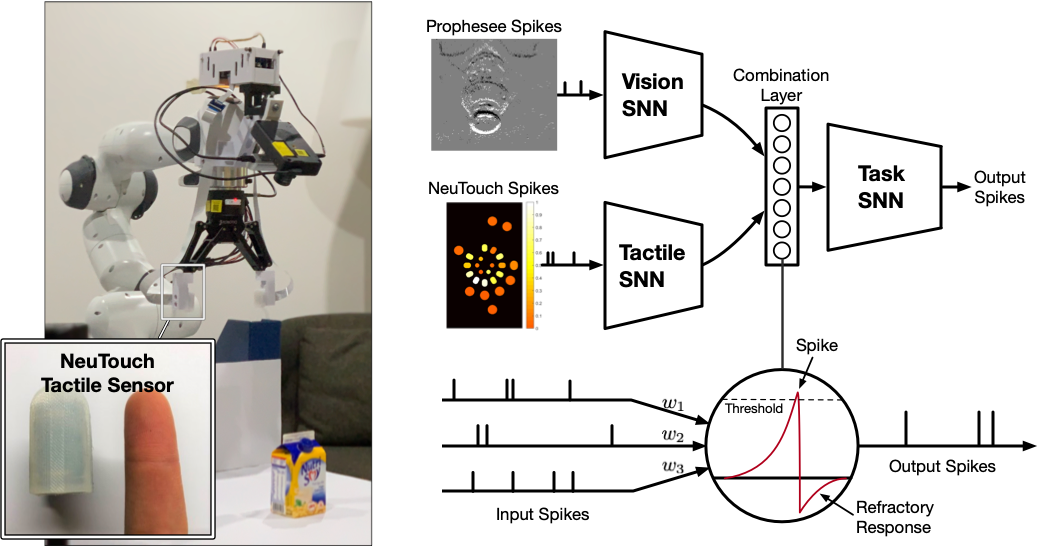

In this work, we draw inspiration from biological systems, which are asynchronous and event-driven. We contribute an event-driven visual-tactile perception system comprising NeuTouch (a biologically inspired tactile sensor) and the VT-SNN for multi-modal spike-based perception.

We evaluate our visual-tactile system (using the NeuTouch and Prophesee event camera) on two robot tasks: container classification and rotational slip detection. In the first experiment, we show that relatively small differences in weight (approx. 30 g across 20 object-weight classes) can be distinguished by our prototype sensors and spiking models. The second experiment indicates that rotational slip can be accurately detected within 0.08 s. When tested on the Intel Loihi, the SNN achieved inference speeds similar to a GPU, but required an order of magnitude less power.

Our Paper

Event-Driven Visual-Tactile Sensing and Learning for Robots

Tasbolat Taunyazov, Weicong Sng, Hian Hian See, Brian Lim, Jethro Kuan, Abdul Fatir Ansari, Benjamin Tee, and Harold Soh

Robotics: Science and Systems Conference (RSS), 2020. PDF.

@inproceedings{taunyazov20event,

title = {Event-Driven Visual-Tactile Sensing and Learning for Robots},

author = {Tasbolat Taunyazov and Weicong Sng and Hian Hian See and Brian Lim and Jethro Kuan and Abdul Fatir Ansari and Benjamin Tee and Harold Soh},

booktitle = {Proceedings of Robotics: Science and Systems},

year = {2020},

month = {July}

}

Team

Tasbolat Taunyazov¹

Weicong Sng¹

Hian Hian See²

Brian Lim²,³

Jethro Kuan¹

Abdul Fatir Ansari¹

Benjamin C.K. Tee²,³

Harold Soh¹

¹ Dept. of Computer Science, National University of Singapore

² Dept. of Materials Science and Engineering, National University of Singapore

³ Institute for Health Technology and Innovation, National University of Singapore

Videos

Our 5-minute Technical Talk at RSS 2020:

Contact

We’re happy to hear from you! Please see our FAQ page first, and if you have further questions or comments, please contact Tasbolat Taunyazov or Harold Soh.

Acknowledgements

This work was supported by the SERC, A*STAR, Singapore, through the National Robotics Program under Grant No. 172 25 00063, an NUS Startup Grant 2017-01, and the Singapore National Research Foundation (NRF) Fellowship NRFF2017-08. Thank you to Intel for access to the Neuromorphic Research Cloud.