Observed Adversaries in Deep Reinforcement Learning, Eugene Lim★ and Harold Soh★, AAAI Fall Symposium Series, Artificial Intelligence for Human-Robot Interaction

Links:

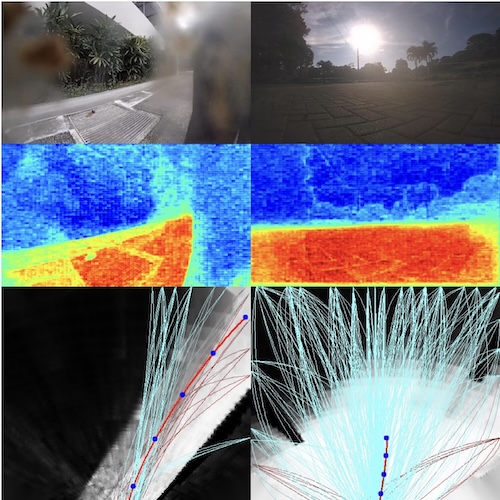

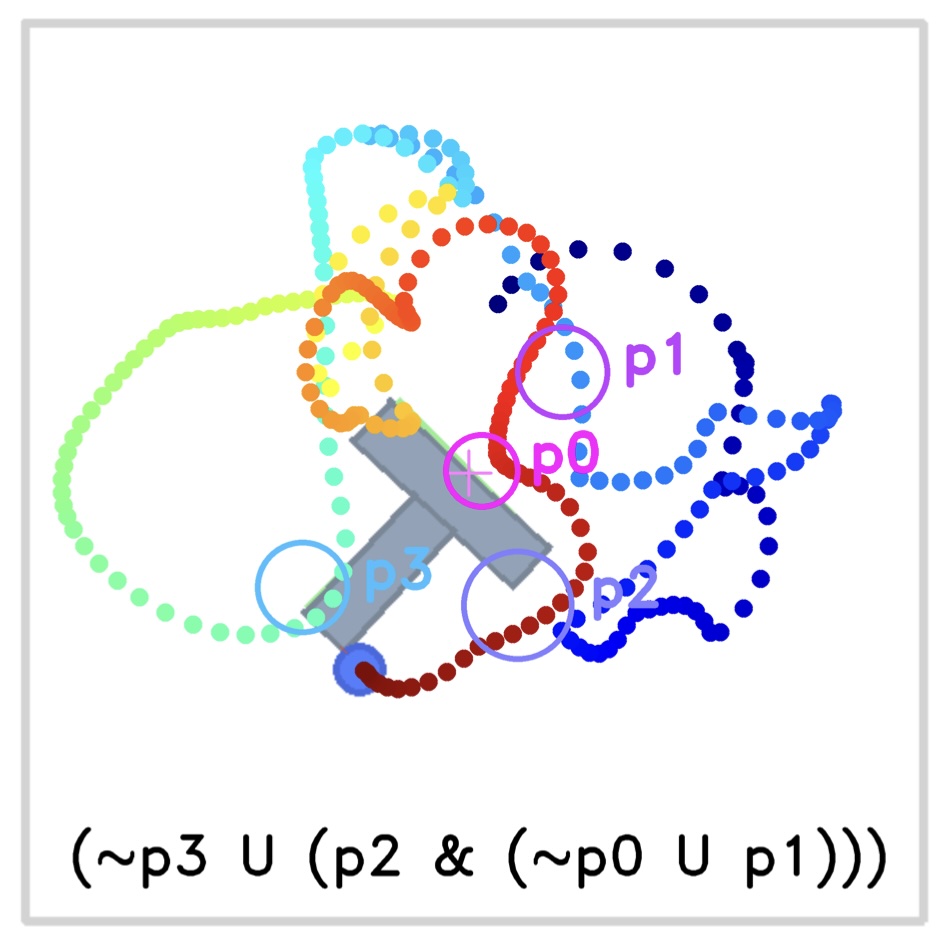

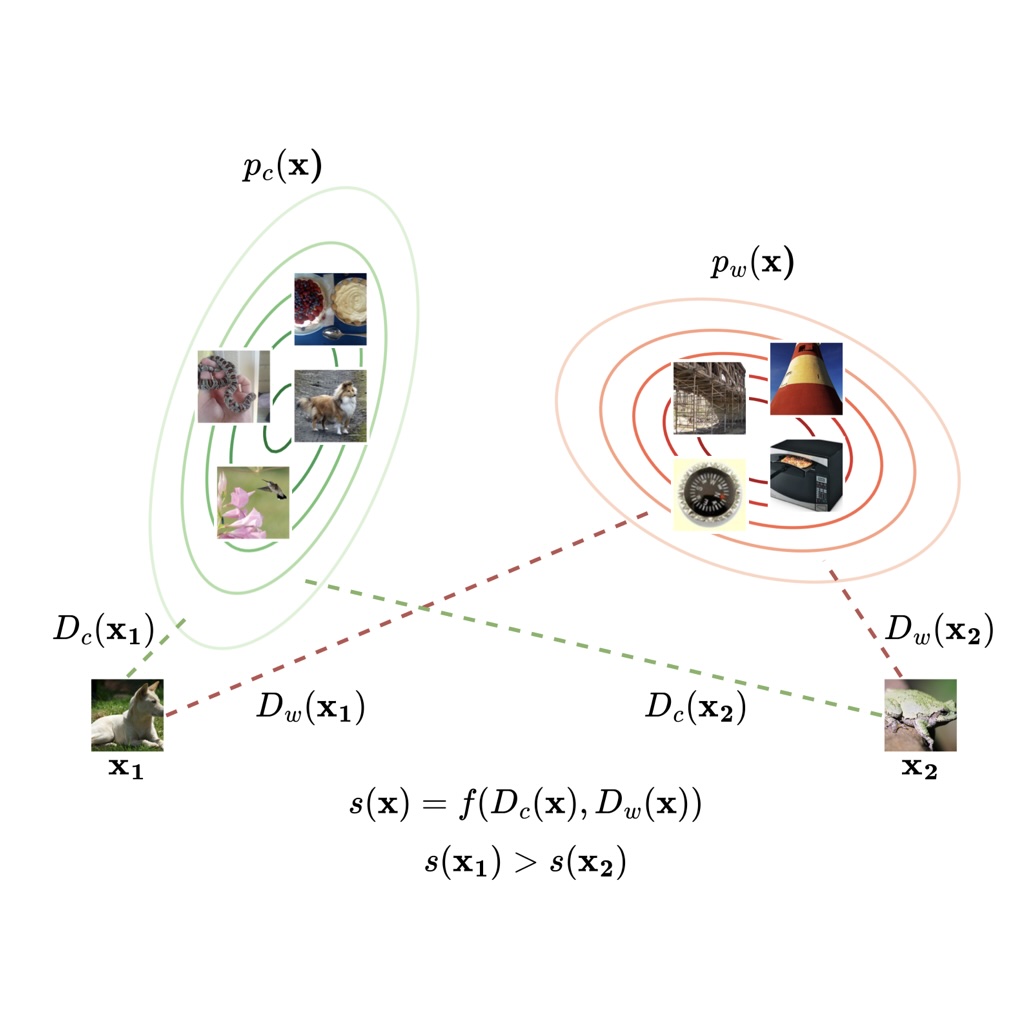

In this work, we point out the problem of observed adversaries for deep policies. Specifically, recent work has shown that deep reinforcement learning is susceptible to adversarial attacks in which an observed adversary acts under environmental constraints to induce natural but adversarial observations. This setting is particularly relevant for human-robot interaction (HRI), since robots in HRI settings are expected to perform their tasks around and with other agents. We demonstrate that this effect persists even with low-dimensional observations. We further show that these adversarial attacks transfer across victims, which potentially allows malicious attackers to train an adversary without access to the target victim.

Resources

You can find our paper here.

Citation

Please consider citing our paper if you build upon our results and ideas.

Eugene Lim★ and Harold Soh★, “Observed Adversaries in Deep Reinforcement Learning”, AAAI Fall Symposium Series, Artificial Intelligence for Human-Robot Interaction

@inproceedings{lim2022observed,
  title={Observed Adversaries in Deep Reinforcement Learning},
  author={Lim, Eugene and Soh, Harold},
  booktitle={AAAI Fall Symposium Series, Artificial Intelligence for Human-Robot Interaction},
  year={2022}
}

Contact

If you have questions or comments, please contact Eugene.