Large Language Models as Zero-Shot Human Models for Human-Robot Interaction

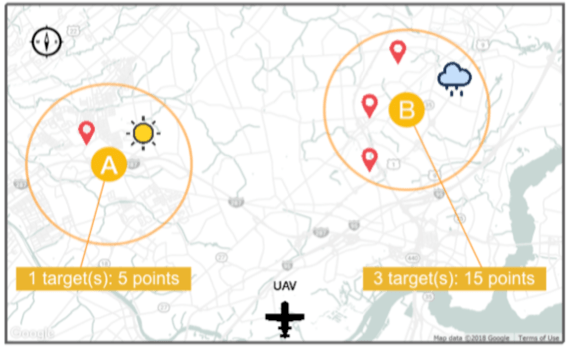

We explore the potential of large language models (LLMs) to act as zero-shot human models for human-robot interaction (HRI). We contribute an empirical study, together with case studies on a simulated table-clearing task and a new robot utensil-passing experiment.