If you knew a robot could navigate around obstacles, would you trust it to follow a person across a room? Or maybe not?

TL;DR: We find that human trust in robots varies across task contexts in a structured manner. Based on this observation, we model trust as a latent function that depends on a “perceptual task space” and develop state-of-the-art Bayesian, Neural, and Hybrid trust models. Our work enables robots to predict and reason about human trust across tasks and potentially other contexts.

Read on below for key highlights. You can find more details in our paper.

The Importance of Human Trust in Robots

Would you trust a robot butler to bring you a cup of hot coffee? Or perhaps a self-driving car to bring you safely to work? Or a fully automated plane to fly you and your family for a holiday? Your trust in these machines and other robots affects whether you would place your well-being in their hands (or grippers).

As robots gain capabilities and are increasingly deployed, e.g., for patient rehabilitation and to provide frontline assistance during the COVID-19 pandemic, it is important to ensure humans have the right amount of trust in robots. Miscalibrated trust can be dangerous: undertrust in robots leads to poor usage, and overtrust in robots can cause people to rely on a robot (e.g., a self-driving car) in situations that it is unable to handle (e.g., bad weather).

Further Reading: For a general overview about trust in robots, see our review article.

How does Trust Change Across Tasks?

We are currently building new robots capable of performing different sorts of tasks. But there is very little work on whether, when, and how human trust in robots “transfers” between tasks.

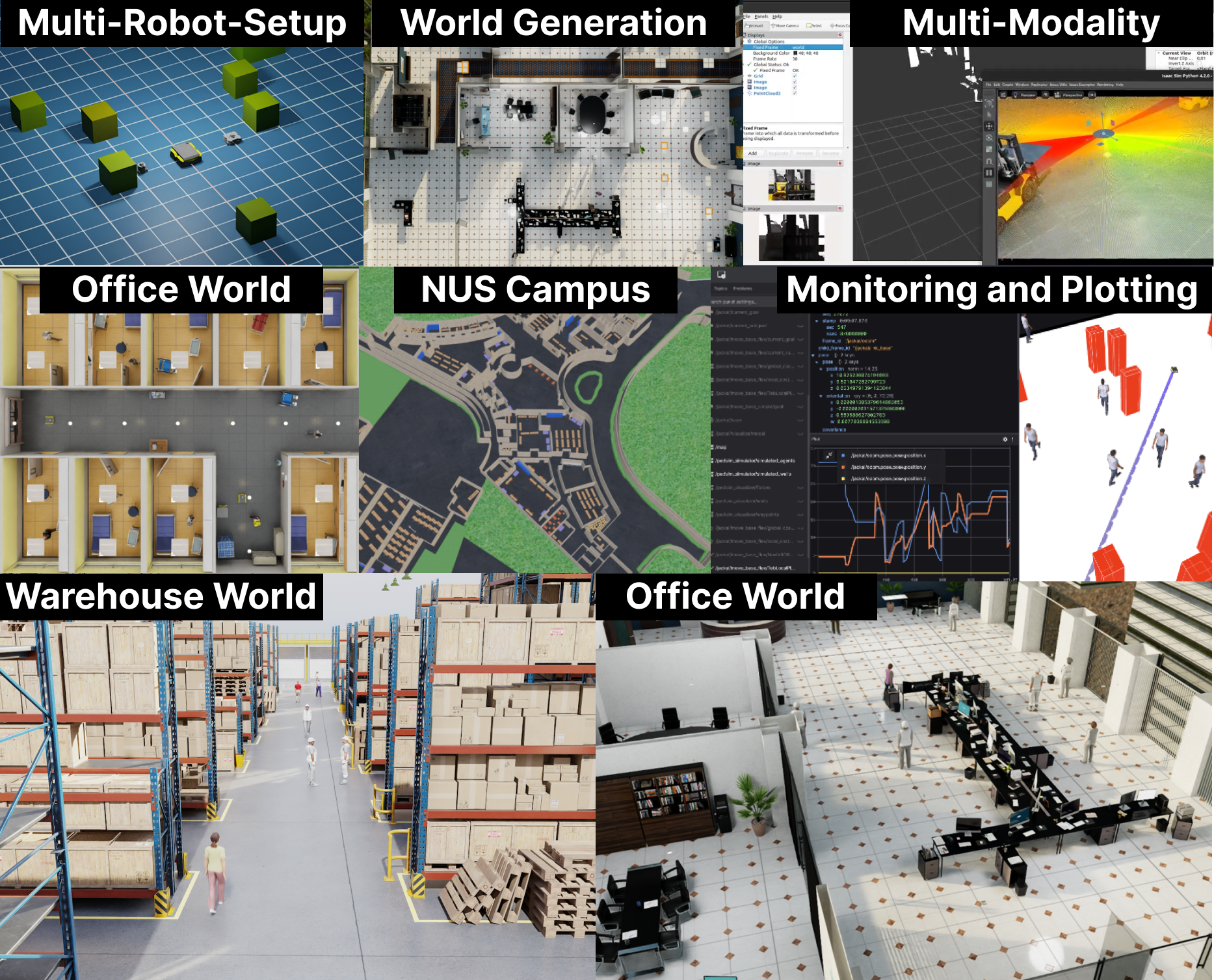

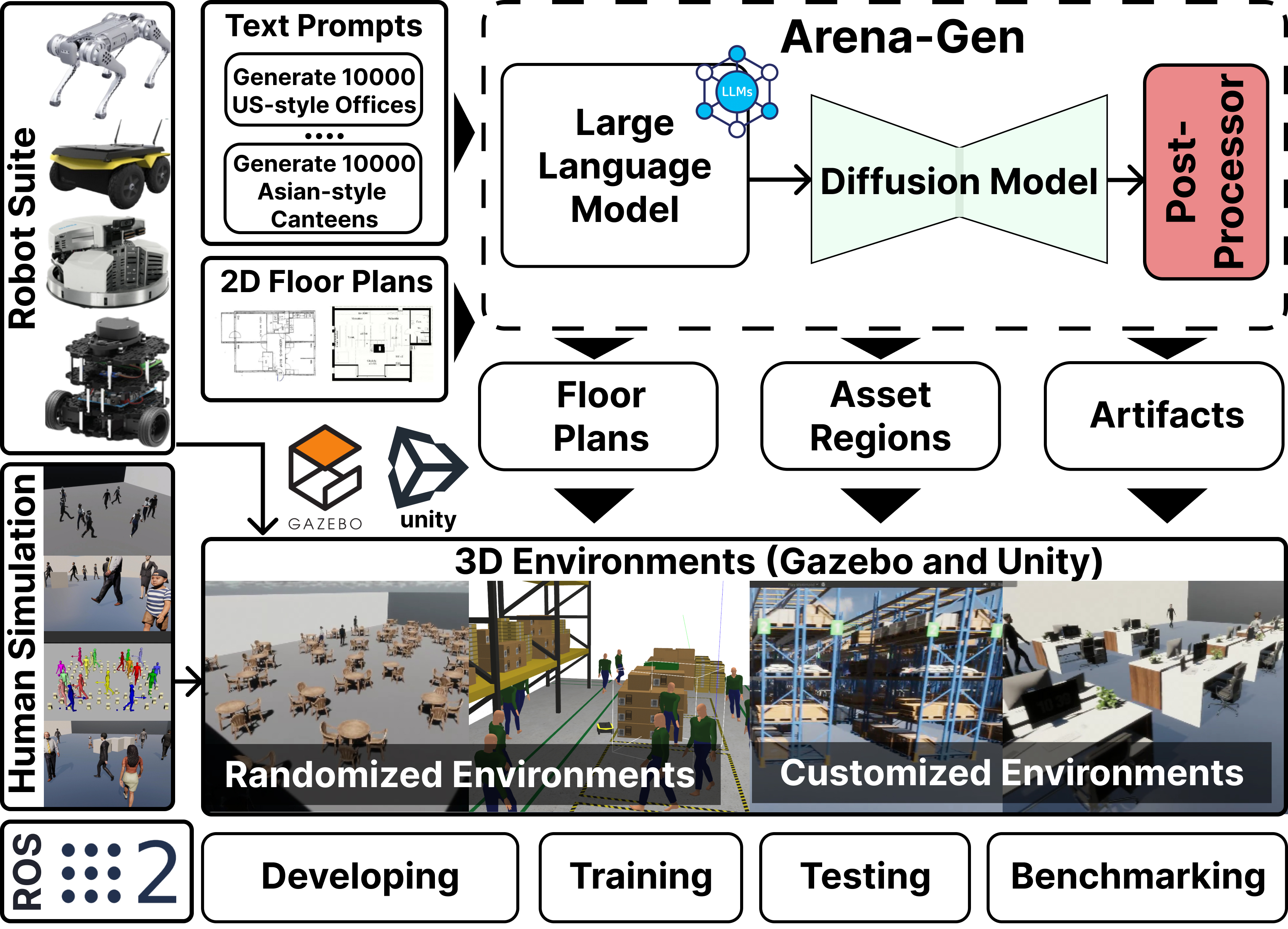

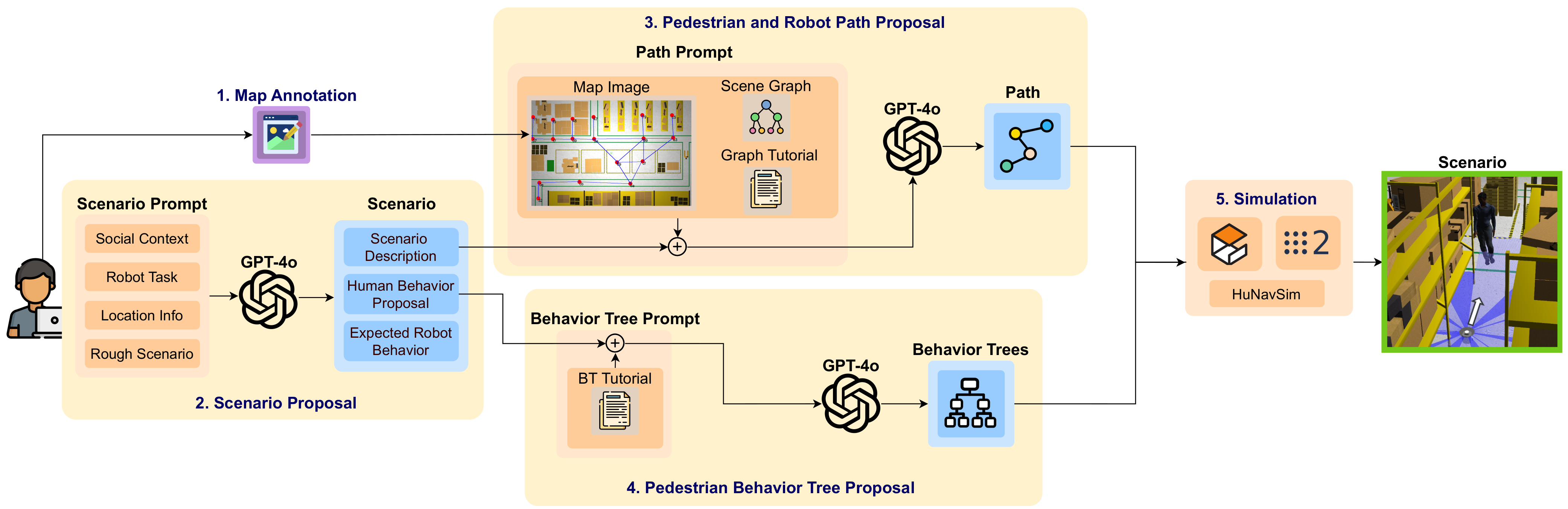

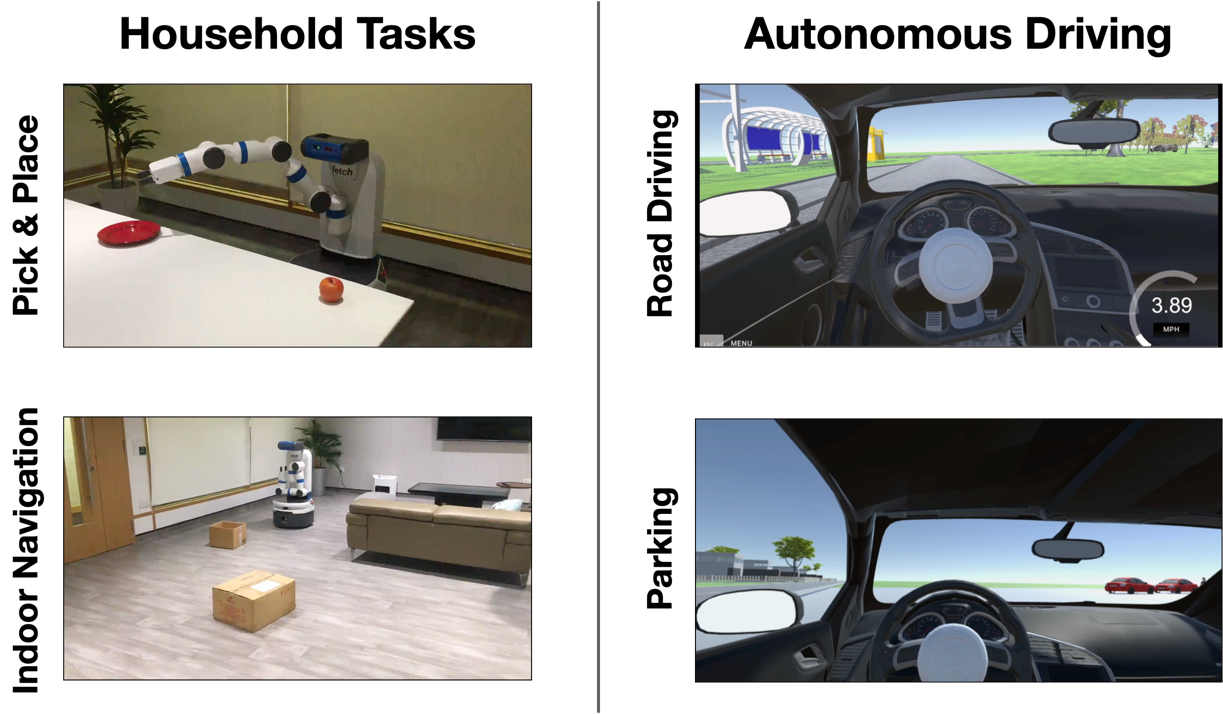

To study trust transfer, we performed an in-lab HRI experiment in two separate domains (Household and Autonomous Driving), each with 12 tasks. In the Household domain, we used a Fetch robot which picked and placed different objects, and navigated around a room under different conditions. In the Autonomous Driving domain, we used a VR simulation of a self-driving car performing various road driving and parking maneuvers. The two domains are separate: there are no crossover tasks since the robots are different. Using two domains helps ensure that whatever we discover about trust transfer isn’t specific to just one domain.

In brief, we had three major findings:

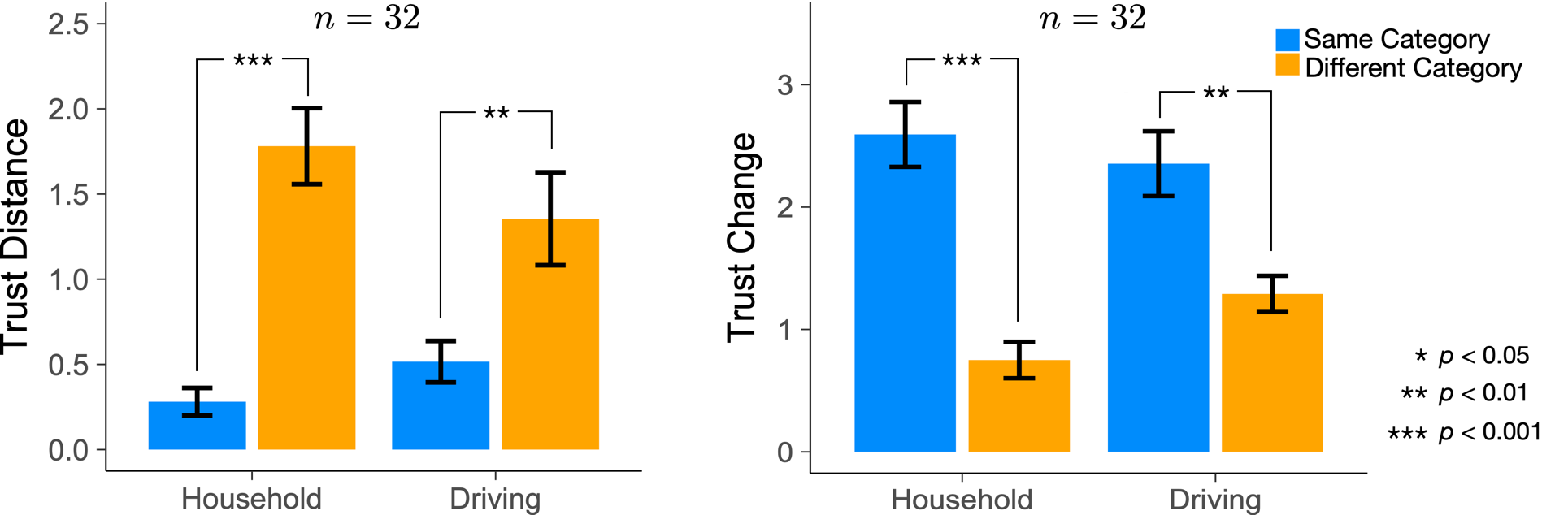

- Task Similarity matters: if we trust the robot to perform a task (e.g., picking up an apple), we’re more likely to trust the robot to perform something similar (picking up a cup) compared to a different task (navigating to the living room).

- Trust Change Transfers: trust changes are “shared”. When we see a robot perform a task, it doesn’t just change our trust in the robot’s ability to do that specific task, but also in similar unseen tasks.

- Task Difficulty matters: Finally, human trust transfer appears to be asymmetric: when you trust a robot to do a certain task, it’s more likely you’ll trust the robot to do something you perceive as easier, compared to something a lot more difficult.

These findings are important because

Modeling Human Trust as a Latent Function

From our study above, we see that (1) trust is task dependent and (2) there is an underlying structure to tasks that impacts how trust transfers and evolves. A natural question is how we can formalize these concepts in a computational model. Having such a model would enable robots to predict and reason about human trust.

How can we represent the structure or similarity between tasks? In this work, we used vector task spaces: each task is represented by a real vector in a perceptual space, \(\mathcal{Z}\). We learn a projection function \(f(\mathbf{x})\) that takes as input task features \(\mathbf{x}\) (e.g., a sentence description of the task or a video) and maps them to a point \(z\) in our perceptual task space. The video below shows this process and where the 12 tasks in our household domain end up:
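To make the idea of a task space concrete, here is a minimal sketch in Python. It assumes a fixed linear projection and hand-made three-dimensional task features. Both are hypothetical stand-ins for illustration, not the learned projection or the features used in the paper.

```python
import math

# Hypothetical projection matrix W (2 x 3). In practice the projection
# would be learned from data; these numbers are made up.
W = [
    [1.0, 0.0, 0.5],
    [0.0, 1.0, 0.5],
]

def project(x):
    """Map task features x to a point z in a 2-D task space (z = W x)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

# Made-up features: [involves grasping, involves navigation, perceived difficulty]
tasks = {
    "pick up an apple": [1.0, 0.0, 0.2],
    "pick up a cup": [1.0, 0.0, 0.3],
    "navigate to the living room": [0.0, 1.0, 0.4],
}

points = {name: project(x) for name, x in tasks.items()}

# Euclidean distances in the task space: smaller means "more similar".
d_apple_cup = math.dist(points["pick up an apple"], points["pick up a cup"])
d_apple_nav = math.dist(points["pick up an apple"],
                        points["navigate to the living room"])

print(f"apple vs cup: {d_apple_cup:.3f}")
print(f"apple vs navigation: {d_apple_nav:.3f}")
```

With these made-up features, the two picking tasks land close together and the navigation task lands further away, which is the kind of structure a task space is meant to capture.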

Given this task space, we model a person’s (say, Bob’s) trust in a robot \(a\) at a given time \(t\) as a function \(\tau_t^a(z)\) over \(\mathcal{Z}\). We can then ask the model questions such as: “What is Bob’s trust in the robot’s ability to navigate while following a person?”. The model says 0.64, which means Bob doesn’t quite trust the robot to do so.

Trust is a dynamic quantity that changes given observations of robot performance. We model these changes via an update function \(g\). If Bob sees the robot doing some fancy navigation, his trust changes: his assessment of the robot being able to follow a person increases to about 90%. Similarly, Bob may currently trust the robot to pick and place a lemon. But after seeing the robot fail badly at picking up an apple, he no longer trusts the robot to help him make lemonade.
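One compact way to write down the pieces described so far is sketched below. The observation symbol \(o_{t-1}^a\) (an observed task together with how robot \(a\) performed on it) is introduced here for illustration, and the range \([0, 1]\) is an assumption based on the probability-like trust values quoted above.

\[
z = f(\mathbf{x}) \in \mathcal{Z}, \qquad
\tau_t^a : \mathcal{Z} \to [0, 1], \qquad
\tau_t^a = g\left(\tau_{t-1}^a,\; o_{t-1}^a\right)
\]

Read left to right: task features are projected into the task space, trust is a function over that space, and each new observation of the robot turns the previous trust function into an updated one.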

Trust Model Variants

In our paper, we describe three different models under the general scheme above. All three models make use of a learned task space:

- The Gaussian process (GP) model arises by viewing trust formation as a form of Bayesian function learning: human brains combine prior notions of robot capabilities with evidence of robot performance via Bayes’ rule.

- The Neural model is a data-driven approach that measures task similarities using dot products. The key difference from the GP model is in the flexibility of the trust updates; the neural model is based purely on data and uses Gated Recurrent Units to learn how to update trust.

- The Hybrid model combines both the GP and Neural models; it uses a Bayes update with a data-driven neural component.
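To give a flavor of the Bayesian view, here is a minimal Python sketch of trust as a latent function over a few task points, updated by conditioning a Gaussian process on one observed outcome. It is a simplification under stated assumptions: it swaps in a Gaussian noise model, a squared-exponential kernel, and a logistic squashing function, and the task coordinates are made up. The models in the paper may differ on each of these choices.

```python
import math

def rbf(z1, z2, length_scale=1.0):
    """Squared-exponential kernel: nearby task points are strongly correlated."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(z1, z2))
    return math.exp(-0.5 * sq_dist / length_scale ** 2)

def gp_prior(points, prior_mean=0.0):
    """Prior mean vector and covariance matrix of the latent function."""
    mean = [prior_mean] * len(points)
    cov = [[rbf(p, q) for q in points] for p in points]
    return mean, cov

def gp_update(mean, cov, i, y, noise=0.1):
    """Condition on a noisy observation y of the latent value at task i.

    noise is the observation noise variance (an assumed value).
    """
    n = len(mean)
    s = cov[i][i] + noise
    gain = [cov[j][i] / s for j in range(n)]
    new_mean = [mean[j] + gain[j] * (y - mean[i]) for j in range(n)]
    new_cov = [[cov[j][k] - gain[j] * cov[i][k] for k in range(n)]
               for j in range(n)]
    return new_mean, new_cov

def trust(latent):
    """Squash a latent value into (0, 1) with a logistic function."""
    return 1.0 / (1.0 + math.exp(-latent))

# Hypothetical task-space coordinates (made up for illustration).
names = ["pick up an apple", "pick up a cup", "navigate to the living room"]
points = [[1.1, 0.1], [1.15, 0.15], [0.2, 1.2]]

mean, cov = gp_prior(points)
before = [trust(m) for m in mean]

# Observe the robot succeed at picking up the apple (encoded as y = +1).
mean, cov = gp_update(mean, cov, i=0, y=1.0)
after = [trust(m) for m in mean]

for name, b, a in zip(names, before, after):
    print(f"{name}: {b:.2f} -> {a:.2f}")
```

In this sketch, a single observed success raises trust for all three tasks, but more for the similar picking task than for the distant navigation task. A failure (for example, y = -1) would lower trust in the same similarity-weighted way.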

We tested our three models using the data collected in our human-subject experiments and show they outperform existing state-of-the-art predictive trust models that do not consider task dependence. Among the three models, the Hybrid model performs the best. For more results, please see our paper.

Towards the Future

This paper takes a step towards conceptualizing and formalizing human trust in robots across multiple tasks. Formalizing trust as a function opens up several avenues for future research. Moving forward, we aim to fully exploit this characterization by incorporating other contexts. For example, does trust transfer when the environment changes substantially or when a new but similar robot appears?

We are also working on improving our experiments: we need better ways to elicit and measure trust in naturalistic settings. Our current experiments employ relatively short interactions with the robot and rely on subjective self-assessments. Future experiments could employ behavioral measures, such as operator take-overs and longer-term interactions where trust is likely to play a more significant role.

It is also essential to examine trust in the robot’s “intention”; we have recently examined how human mental models of both capabilities and intention influence decisions to trust robots and how to use them to enhance human-robot collaboration.

Citation

Consider citing our paper if you build upon our results and ideas.

Soh, Harold, et al. “Multi-task trust transfer for human–robot interaction.” The International Journal of Robotics Research 39.2-3 (2020): 233-249.

@article{soh2019multi,
  title={Multi-task trust transfer for human--robot interaction},
  author={Soh, Harold and Xie, Yaqi and Chen, Min and Hsu, David},
  journal={The International Journal of Robotics Research},
  volume = {39},
  number = {2-3},
  pages = {233-249},
  year = {2020},
  doi = {10.1177/0278364919866905},
  publisher={SAGE Publications Sage UK: London, England}
}

Contact

If you have questions or comments, please contact Harold Soh.

Acknowledgements

This work was supported in part by an NUS Office of the Deputy President (Research and Technology) Startup Grant and in part by MoE AcRF Tier 2 grant MOE2016-T2-2-068.