Embedding Symbolic Temporal Knowledge into Deep Sequential Models, Yaqi Xie ★, Fan Zhou ★, and Harold Soh ★, IEEE International Conference on Robotics and Automation (ICRA)

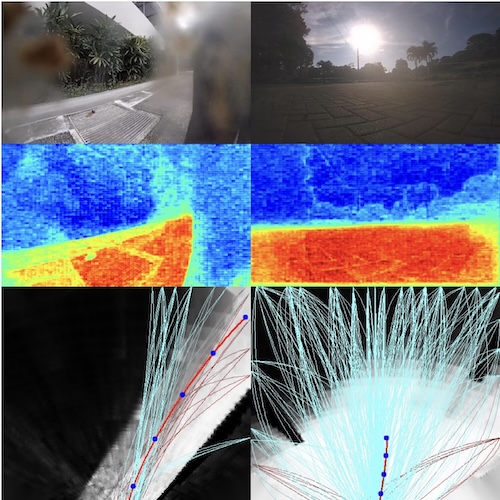

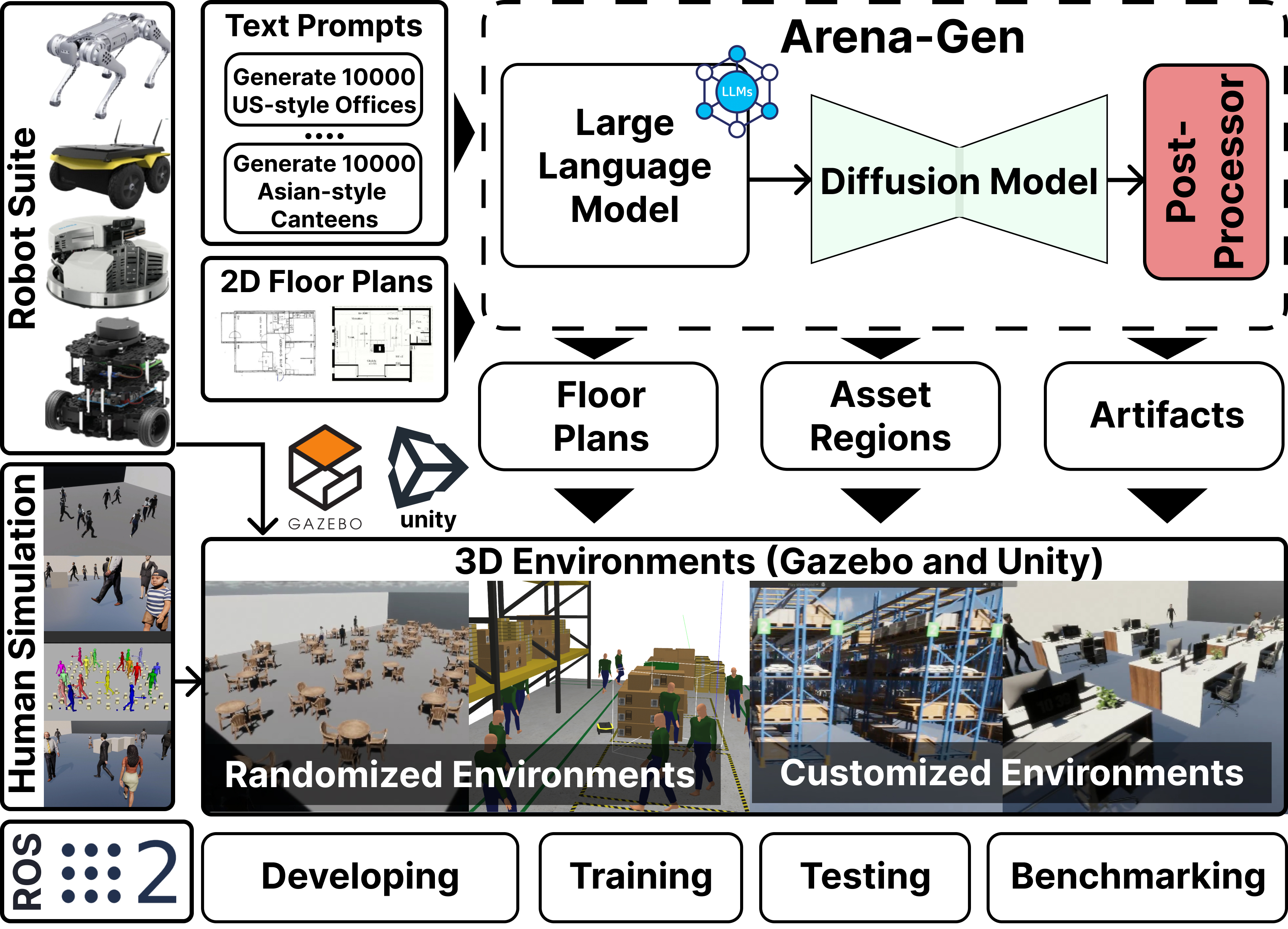

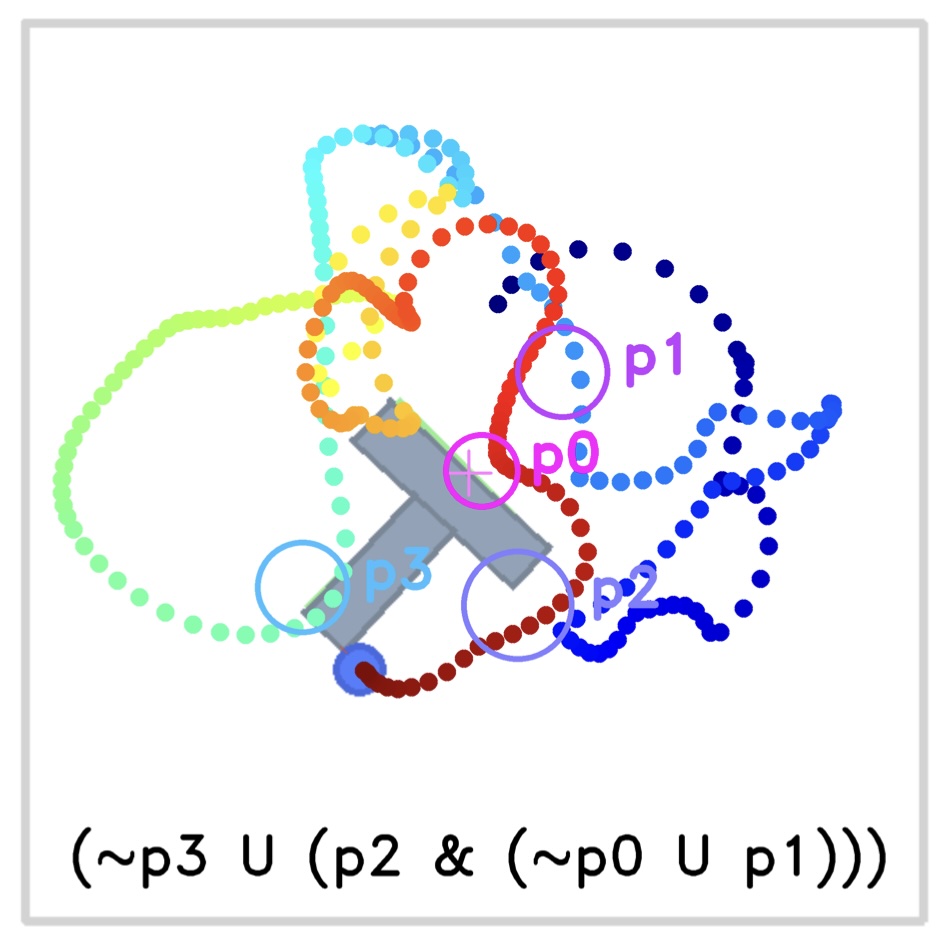

Sequences and time series often arise in robot tasks, e.g., in activity recognition and imitation learning. In recent years, deep neural networks (DNNs) have emerged as an effective data-driven methodology for processing sequences, given sufficient training data and compute resources. However, when data is limited, simpler models such as logic/rule-based methods work surprisingly well, especially when relevant prior knowledge is applied in their construction. Unlike DNNs, though, these “structured” models can be difficult to extend and do not work well with raw, unstructured data. In this work, we seek to learn flexible DNNs that still leverage prior temporal knowledge when it is available. Our approach is to embed symbolic knowledge expressed as linear temporal logic (LTL) and use these embeddings to guide the training of deep models. Specifically, we use a Graph Neural Network (GNN) to construct semantic-based embeddings of the automata generated from LTL formulae. Experiments show that these learnt embeddings can improve performance on downstream robot tasks such as sequential action recognition and imitation learning.
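The pipeline described above (LTL formula, then automaton, then GNN embedding, then a training signal) can be illustrated with a small plain-Python sketch. This is not the authors' implementation (their repository has that); it is a toy under loudly stated assumptions: the DFA for the formula F(a ∧ F b) is written by hand rather than generated by an LTL-to-automaton tool, each time step emits exactly one symbol from {"a", "b", "none"}, the message-passing weights are random rather than learned, edge labels are ignored, and `alignment_loss` is a hypothetical regulariser. All function names are illustrative only.

```python
import math
import random

# Hypothetical hand-built DFA for the LTL formula F(a & F b): "a occurs, and b occurs at a later step".
# Assumptions (not from the paper): finite traces, one symbol per step from {"a", "b", "none"}.
# States: 0 = waiting for a, 1 = waiting for b, 2 = accepting sink.
TRANSITIONS = {
    (0, "a"): 1, (0, "b"): 0, (0, "none"): 0,
    (1, "a"): 1, (1, "b"): 2, (1, "none"): 1,
    (2, "a"): 2, (2, "b"): 2, (2, "none"): 2,
}
INITIAL, ACCEPTING, NUM_STATES = 0, {2}, 3


def accepts(trace):
    """Run the DFA over a symbol sequence and report whether it ends in an accepting state."""
    state = INITIAL
    for symbol in trace:
        state = TRANSITIONS[(state, symbol)]
    return state in ACCEPTING


def matvec(w, x):
    """Multiply matrix w (list of rows) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def embed_automaton(dim=4, rounds=2, seed=0):
    """Toy GNN: mean-aggregation message passing over the DFA graph, then mean-pool readout.
    Weights are random and untrained here; in a real model they would be learned."""
    rng = random.Random(seed)
    # Initial node features per state: [is_initial, is_accepting, bias].
    h = [[float(s == INITIAL), float(s in ACCEPTING), 1.0] for s in range(NUM_STATES)]
    # Undirected neighbour sets from the transitions (self-loops and edge labels ignored).
    nbrs = {s: set() for s in range(NUM_STATES)}
    for (src, _), dst in TRANSITIONS.items():
        if src != dst:
            nbrs[src].add(dst)
            nbrs[dst].add(src)
    in_dim = len(h[0])
    for _ in range(rounds):
        w_self = [[rng.gauss(0, 1) for _ in range(in_dim)] for _ in range(dim)]
        w_nbr = [[rng.gauss(0, 1) for _ in range(in_dim)] for _ in range(dim)]
        new_h = []
        for v in range(NUM_STATES):
            count = max(len(nbrs[v]), 1)  # avoid division by zero for isolated states
            agg = [sum(h[u][k] for u in nbrs[v]) / count for k in range(in_dim)]
            pre = [a + b for a, b in zip(matvec(w_self, h[v]), matvec(w_nbr, agg))]
            new_h.append([max(0.0, z) for z in pre])  # ReLU
        h, in_dim = new_h, dim
    # Graph-level embedding: mean over all state embeddings.
    return [sum(h[v][k] for v in range(NUM_STATES)) / NUM_STATES for k in range(dim)]


def alignment_loss(seq_emb, formula_emb):
    """Hypothetical regulariser (not taken from the paper): 1 - cosine similarity,
    pulling a sequence model's embedding toward the formula embedding."""
    dot = sum(a * b for a, b in zip(seq_emb, formula_emb))
    norm = math.sqrt(sum(a * a for a in seq_emb)) * math.sqrt(sum(b * b for b in formula_emb))
    return 1.0 - dot / norm if norm > 0 else 1.0
```

For example, `accepts(["none", "a", "b"])` is `True` while `accepts(["b", "a"])` is `False`. In a training loop, a term like `alignment_loss` would be added to the task loss so that the sequence model's representation is nudged toward the embedding of the formula it should satisfy; how the actual method combines these terms is described in the paper.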

Resources

You can find our paper here. Check out our repository here on GitHub.

Citation

Please consider citing our paper if you build upon our results and ideas.

Yaqi Xie ★, Fan Zhou ★, and Harold Soh ★, “Embedding Symbolic Temporal Knowledge into Deep Sequential Models”, IEEE International Conference on Robotics and Automation (ICRA), 2021.

    @inproceedings{xie20templogic,
      title={Embedding Symbolic Temporal Knowledge into Deep Sequential Models},
      author={Yaqi Xie and Fan Zhou and Harold Soh},
      year={2021},
      booktitle={IEEE International Conference on Robotics and Automation (ICRA)}
    }

Contact

If you have questions or comments, please contact Yaqi.