In recent years, deep neural networks (DNNs) have emerged as an effective data-driven methodology for both i.i.d. and sequential data. However, when data or computational resources are limited, simpler models such as logic/rule-based methods work surprisingly well, especially when relevant prior knowledge is applied in their construction. Unlike DNNs, these “structured” models can be difficult to extend and do not work well with raw, unstructured data.

In sharp contrast to connectionist NN structures, logical formulae are explainable, compositional, and can be explicitly derived from human knowledge. Therefore, we aim to leverage prior symbolic knowledge to improve the performance of deep models.

In two recent papers, we propose to view symbolic knowledge as another form of data. The big question is: how can we represent this data, and how can we train models to be consistent with it?

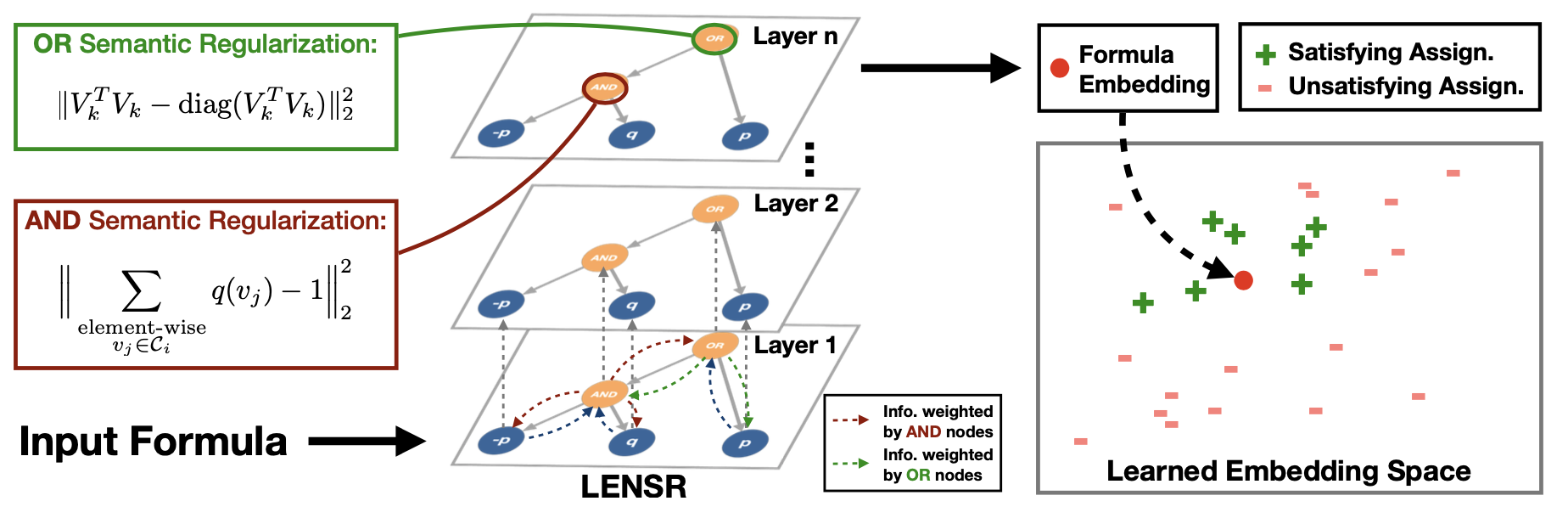

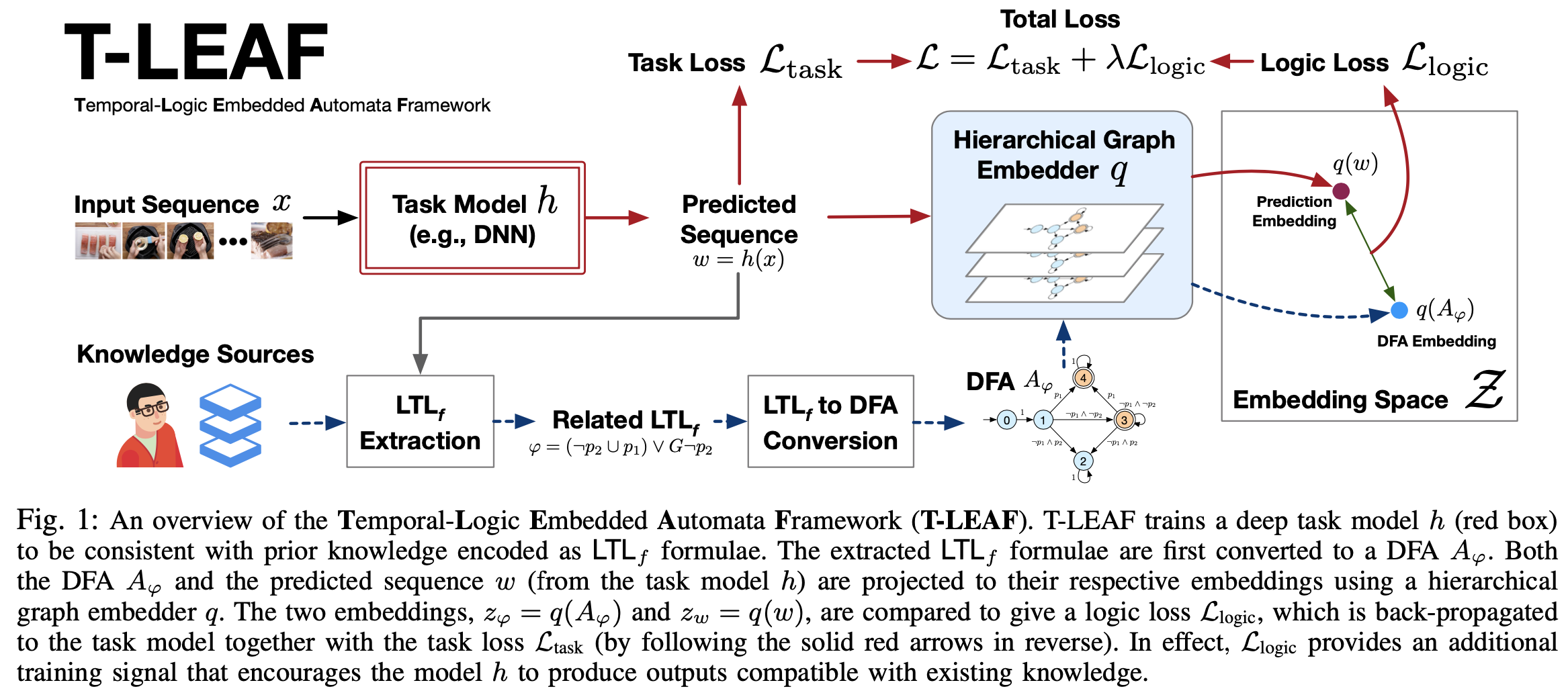

We propose graph embedding frameworks that project logic formulae (and assignments) onto a manifold via an augmented Graph Neural Network (GNN). These embeddings then define a logic loss that guides deep models during training. We instantiate this approach in two frameworks: LENSR (for propositional logic) and T-LEAF (for finite linear temporal logic). Experiments show that both LENSR and T-LEAF improve the performance of deep models. For more details, check out our LENSR paper (NeurIPS 2019) and our T-LEAF paper (ICRA 2021).
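The post does not give the exact form of the logic loss. As a minimal sketch, assuming the logic loss is the squared distance between the embedding of a formula and the embedding of the model's predicted assignment, added to the ordinary task loss with a hypothetical weight `lam`, the combination could look like this (the function names are illustrative, not taken from the LENSR code):

```python
from typing import Sequence


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    return sum((x - y) ** 2 for x, y in zip(a, b))


def total_loss(task_loss: float,
               formula_emb: Sequence[float],
               assignment_emb: Sequence[float],
               lam: float = 0.1) -> float:
    """Task loss plus a weighted logic loss.

    Assumption: the logic loss pulls the embedding of the predicted
    assignment towards the embedding of the formula; `lam` is a
    hypothetical trade-off weight.
    """
    logic_loss = squared_distance(formula_emb, assignment_emb)
    return task_loss + lam * logic_loss
```

For example, `total_loss(0.5, [1.0, 0.0], [0.0, 0.0], lam=0.1)` adds a logic penalty of 0.1 to a task loss of 0.5. In a real training loop both embeddings would come from a trained graph network and the loss would be differentiated with an autodiff framework.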

LENSR: Embedding Symbolic Knowledge into Deep Networks

At its heart, Logic Embedding Network with Semantic Regularization (LENSR) is a graph embedding framework; it projects logic formulae (and assignments) onto a manifold via an augmented Graph Neural Network (GNN).
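As a rough sketch of the first step, assuming formulas are written as nested Python tuples (a hypothetical encoding), the code below turns a formula into a graph with one node per connective and one shared node per proposition, then runs a few rounds of untrained mean-aggregation message passing to obtain a fixed-size vector. The node features and aggregation rule are illustrative assumptions; LENSR's augmented GNN learns its parameters and is not reproduced here.

```python
from typing import Dict, List, Tuple, Union

# A formula is a proposition name (str) or a tuple (op, *children),
# with op in {"not", "and", "or"}. This encoding is hypothetical.
Formula = Union[str, tuple]
NODE_TYPES = ["var", "not", "and", "or"]


def formula_to_graph(formula: Formula) -> Tuple[List[str], List[Tuple[int, int]]]:
    """Return node types and (parent, child) edges; repeated propositions share one node."""
    types: List[str] = []
    edges: List[Tuple[int, int]] = []
    var_ids: Dict[str, int] = {}

    def visit(f: Formula) -> int:
        if isinstance(f, str):
            if f not in var_ids:
                var_ids[f] = len(types)
                types.append("var")
            return var_ids[f]
        op, *children = f
        node = len(types)
        types.append(op)
        for child in children:
            edges.append((node, visit(child)))
        return node

    visit(formula)
    return types, edges


def embed(formula: Formula, rounds: int = 2) -> List[float]:
    """Toy, weight-free message passing: average neighbour features, then mean-pool."""
    types, edges = formula_to_graph(formula)
    # Initial features: one-hot encoding of each node's type.
    h = [[1.0 if t == name else 0.0 for name in NODE_TYPES] for t in types]
    neighbours: List[List[int]] = [[i] for i in range(len(types))]  # self-loops
    for a, b in edges:  # treat edges as undirected for aggregation
        neighbours[a].append(b)
        neighbours[b].append(a)
    for _ in range(rounds):
        h = [[sum(h[u][k] for u in nbrs) / len(nbrs) for k in range(len(NODE_TYPES))]
             for nbrs in neighbours]
    # Graph-level embedding: mean over all node features.
    return [sum(col) / len(h) for col in zip(*h)]
```

Sharing proposition nodes makes the structure a graph rather than a tree, so two occurrences of the same proposition exchange information through a single node.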

In LENSR, we focus on propositional logic, where a proposition p is a statement that is either True or False. A formula F is a compound of propositions connected by logical connectives, e.g., ¬, ∧, ∨, ⇒. An assignment τ is a function that maps propositions to True or False. An assignment that makes a formula F True is said to satisfy F.
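These definitions can be checked mechanically. Assuming formulas are encoded as nested Python tuples (a hypothetical encoding, with "implies" standing for ⇒), the sketch below evaluates a formula under an assignment τ and enumerates all satisfying assignments by brute force, which is only practical for a handful of propositions.

```python
from itertools import product
from typing import Dict, Iterator, Set, Union

# A formula is a proposition name (str) or a tuple (op, *args) with
# op in {"not", "and", "or", "implies"}. This encoding is hypothetical.
Formula = Union[str, tuple]


def evaluate(f: Formula, tau: Dict[str, bool]) -> bool:
    """Truth value of formula f under assignment tau."""
    if isinstance(f, str):
        return tau[f]
    op, *args = f
    if op == "not":
        return not evaluate(args[0], tau)
    if op == "and":
        return all(evaluate(a, tau) for a in args)
    if op == "or":
        return any(evaluate(a, tau) for a in args)
    if op == "implies":
        return (not evaluate(args[0], tau)) or evaluate(args[1], tau)
    raise ValueError(f"unknown connective: {op}")


def propositions(f: Formula) -> Set[str]:
    """All proposition names that occur in f."""
    if isinstance(f, str):
        return {f}
    return set().union(*(propositions(a) for a in f[1:]))


def satisfying_assignments(f: Formula) -> Iterator[Dict[str, bool]]:
    """Yield every assignment that satisfies f (exponential in #propositions)."""
    names = sorted(propositions(f))
    for values in product([False, True], repeat=len(names)):
        tau = dict(zip(names, values))
        if evaluate(f, tau):
            yield tau
```

For F = p ⇒ (q ∧ ¬r), encoded as `("implies", "p", ("and", "q", ("not", "r")))`, the assignment {p: True, q: True, r: False} satisfies F, setting q to False does not, and five of the eight possible assignments satisfy F.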

Here is an illustration of our LENSR framework.

T-LEAF: Embedding Symbolic Temporal Knowledge into Deep Sequential Models

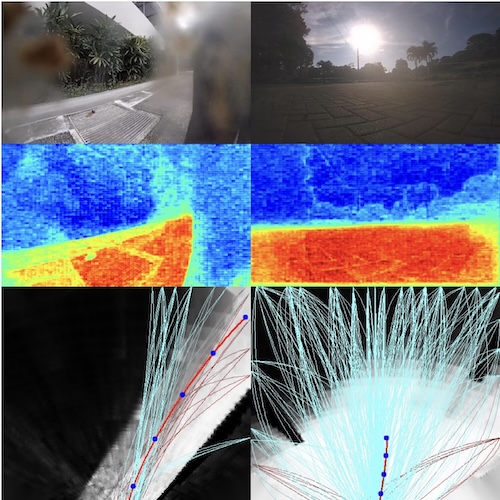

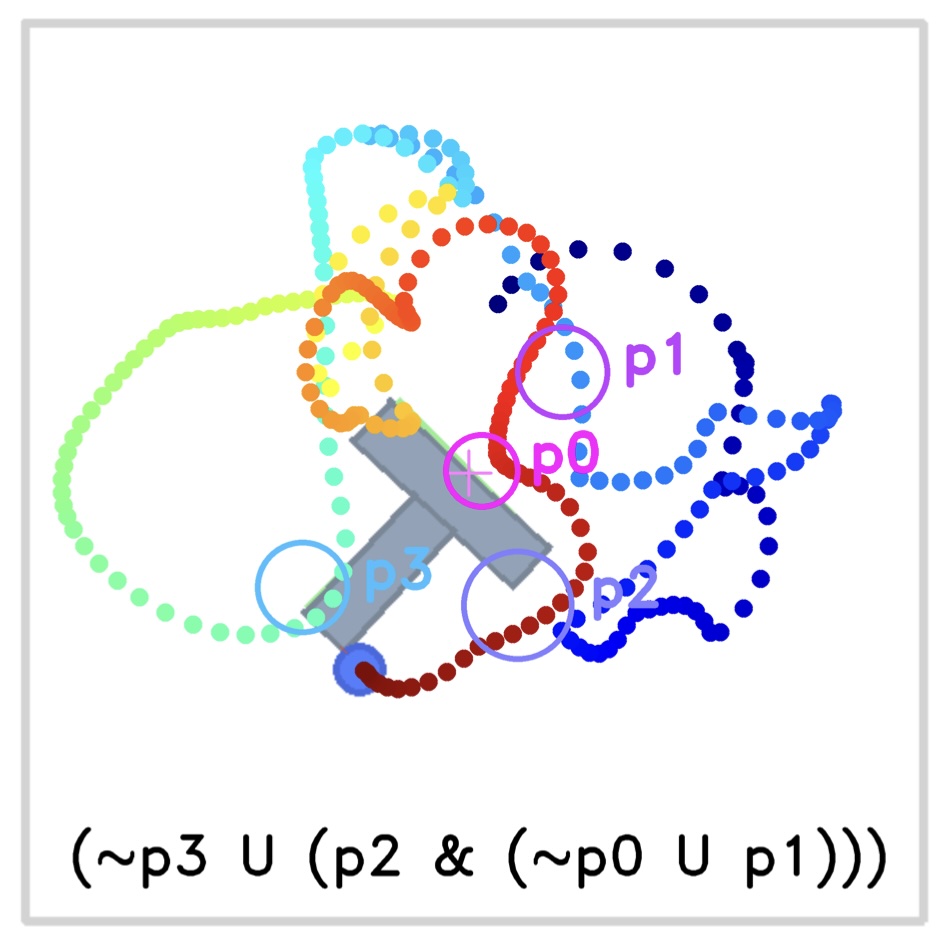

We then extend our framework to incorporate temporal symbolic knowledge expressed in linear temporal logic (LTL), and use the resulting embeddings to guide the training of deep sequential models.

Linear Temporal Logic (LTL) is a propositional modal logic often used to express temporally extended constraints over state trajectories. In this work, we use LTL interpreted over finite traces, which has a natural and intuitive syntax. As a formal language, it has well-defined semantics and is therefore unambiguous to interpret, an advantage over using natural language directly as auxiliary information.
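To make "interpreted over finite traces" concrete, here is a minimal sketch of the standard finite-trace semantics for a few LTL operators: X (strong next), F (eventually), G (globally), and U (until). It assumes a trace is a non-empty list of sets, each holding the propositions true at that step; the cooking-style proposition names are hypothetical. T-LEAF itself embeds automata built from formulas rather than evaluating formulas directly like this.

```python
from typing import List, Set, Union

# A formula is a proposition name (str) or a tuple (op, *args).
Formula = Union[str, tuple]
Trace = List[Set[str]]


def holds(f: Formula, trace: Trace, i: int = 0) -> bool:
    """Does formula f hold at position i of a finite, non-empty trace?"""
    n = len(trace)
    if isinstance(f, str):
        return f in trace[i]
    op, *args = f
    if op == "not":
        return not holds(args[0], trace, i)
    if op == "and":
        return holds(args[0], trace, i) and holds(args[1], trace, i)
    if op == "or":
        return holds(args[0], trace, i) or holds(args[1], trace, i)
    if op == "X":  # strong next: a next position must exist
        return i + 1 < n and holds(args[0], trace, i + 1)
    if op == "F":  # eventually: at some position j >= i
        return any(holds(args[0], trace, j) for j in range(i, n))
    if op == "G":  # globally: at every remaining position
        return all(holds(args[0], trace, j) for j in range(i, n))
    if op == "U":  # args[0] holds until args[1] does
        return any(holds(args[1], trace, j)
                   and all(holds(args[0], trace, k) for k in range(i, j))
                   for j in range(i, n))
    raise ValueError(f"unknown operator: {op}")
```

On the trace `[{"chop"}, {"chop"}, {"stir"}, {"serve"}]`, "chop U stir" and "F serve" hold at position 0, while "G ¬serve" does not. At the last position, "X serve" is false because no next step exists; this is where finite-trace semantics differs from the usual infinite-trace reading.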

Specifically, our proposed Temporal-Logic Embedded Automata Framework (T-LEAF) constructs semantic-based embeddings of automata generated from LTL formulae via a Graph Neural Network.
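T-LEAF's automaton construction and GNN are not reproduced here. As a hedged illustration of the kind of object being embedded, the sketch below hand-writes a deterministic finite automaton (DFA) for the hypothetical formula F(stir ∧ X F serve), i.e. "stir, and strictly later serve", runs it on finite traces, and exports it as a node/edge list that a graph network could consume. In practice such automata are typically generated by an LTL-to-automaton tool rather than written by hand; the class and guard format are assumptions for illustration.

```python
from typing import Dict, List, Optional, Set, Tuple

# Guard: (proposition, must_be_true), or None for "always".
Guard = Optional[Tuple[str, bool]]


class DFA:
    def __init__(self, start: int, accepting: Set[int],
                 transitions: List[Tuple[int, Guard, int]]):
        self.start = start
        self.accepting = accepting
        self.transitions = transitions

    def step(self, state: int, letter: Set[str]) -> int:
        """Follow the first transition out of `state` whose guard matches."""
        for src, guard, dst in self.transitions:
            if src == state and (guard is None or (guard[0] in letter) == guard[1]):
                return dst
        raise ValueError(f"no transition from state {state} on {letter}")

    def accepts(self, trace: List[Set[str]]) -> bool:
        """Run the whole finite trace and check the final state."""
        state = self.start
        for letter in trace:
            state = self.step(state, letter)
        return state in self.accepting

    def to_graph(self) -> Tuple[List[Dict], List[Tuple[int, int, str]]]:
        """Nodes with simple features and labelled edges, e.g. as GNN input."""
        states = sorted({s for s, _, _ in self.transitions}
                        | {d for _, _, d in self.transitions})
        nodes = [{"id": s, "start": s == self.start, "accepting": s in self.accepting}
                 for s in states]

        def label(g: Guard) -> str:
            return "true" if g is None else (g[0] if g[1] else "!" + g[0])

        edges = [(s, d, label(g)) for s, g, d in self.transitions]
        return nodes, edges


# Hand-built DFA for F(stir & X F serve). Guards out of each state are
# mutually exclusive and exhaustive, so the automaton is deterministic.
dfa = DFA(start=0, accepting={2}, transitions=[
    (0, ("stir", True), 1), (0, ("stir", False), 0),
    (1, ("serve", True), 2), (1, ("serve", False), 1),
    (2, None, 2),
])
```

The trace `[{"chop"}, {"stir"}, {"serve"}]` is accepted, while `[{"stir", "serve"}]` is rejected because serving must happen strictly after stirring. The exported nodes and labelled edges are the sort of structure a graph network can embed.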

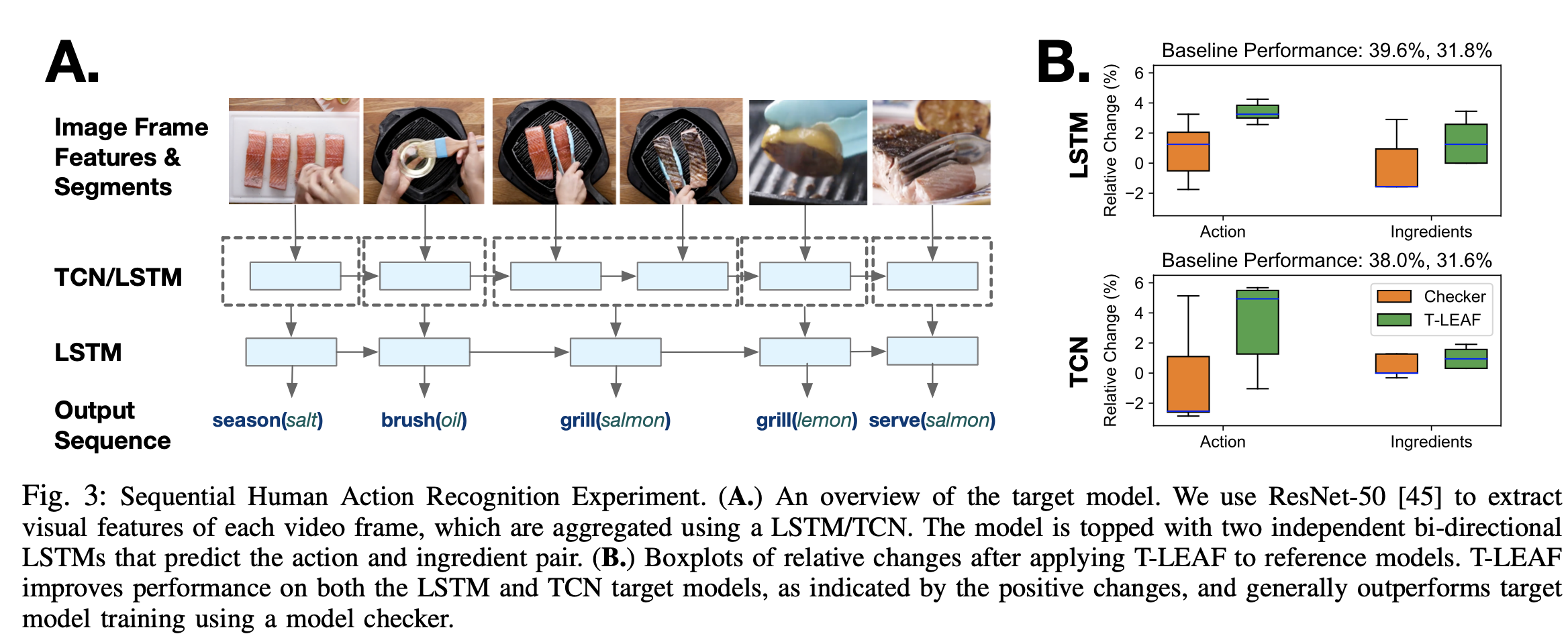

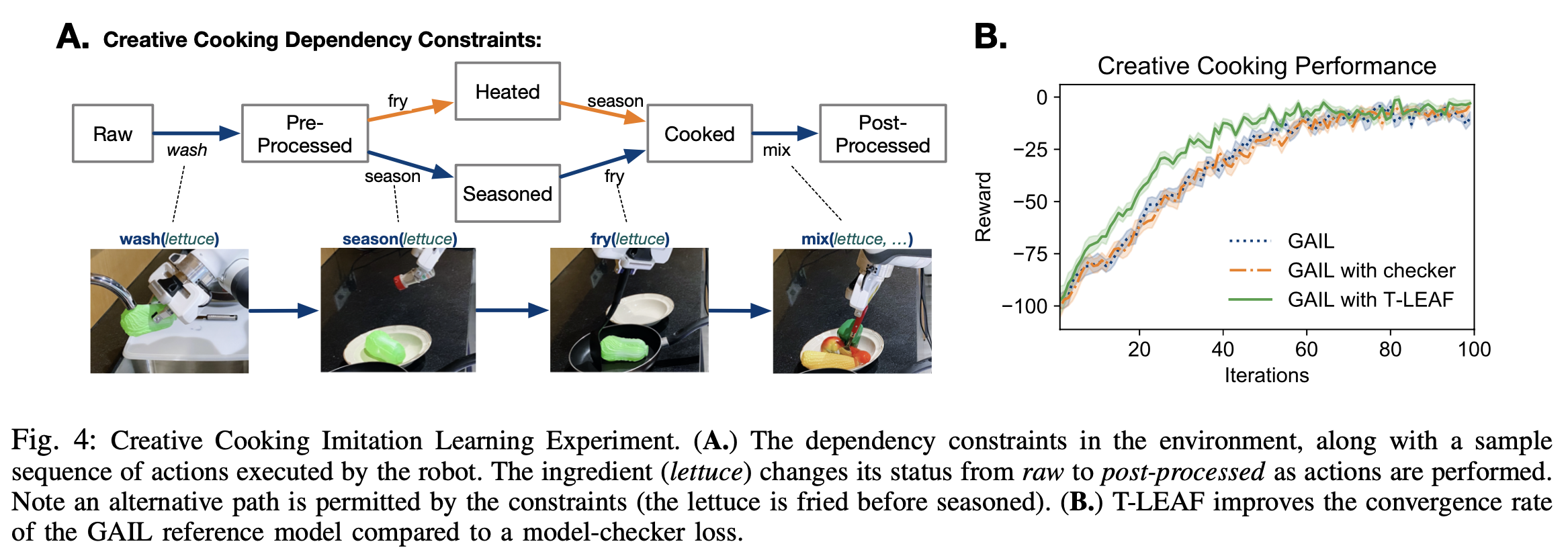

Experiments show that these learnt embeddings can lead to improvements on downstream robot tasks such as sequential human action recognition and imitation learning for creative cooking.

Towards the future

Our experiments show that both LENSR and T-LEAF can enhance the performance of deep neural networks. Moving forward, we aim to explore how other types of prior (symbolic) knowledge, such as domain knowledge and common sense, can be used to improve the properties of flexible data-driven models beyond performance alone.

Resources

- Our LENSR paper

- The poster of LENSR paper

- The code associated with LENSR paper

- Our T-LEAF paper

Citation

Consider citing our papers if you build upon our results and ideas.

Y. Xie, Z. Xu, M. S. Kankanhalli, K. S. Meel, and H. Soh, “Embedding Symbolic Knowledge into Deep Networks,” in NeurIPS, 2019.

@inproceedings{Xie2019,

author = {Xie, Yaqi and Xu, Ziwei and Kankanhalli, Mohan S and Meel, Kuldeep S and Soh, Harold},

booktitle = {Advances in Neural Information Processing Systems},

editor = {H. Wallach and H. Larochelle and A. Beygelzimer and F. d\textquotesingle Alch\'{e}-Buc and E. Fox and R. Garnett},

publisher = {Curran Associates, Inc.},

title = {Embedding Symbolic Knowledge into Deep Networks},

url = {https://proceedings.neurips.cc/paper/2019/file/7b66b4fd401a271a1c7224027ce111bc-Paper.pdf},

volume = {32},

year = {2019}

}

Y. Xie, F. Zhou, and H. Soh, “Embedding Symbolic Temporal Knowledge into Deep Sequential Models,” in ICRA, 2021.

@inproceedings{Xie2021,

title={Embedding Symbolic Temporal Knowledge into Deep Sequential Models},

author={Yaqi Xie and Fan Zhou and Harold Soh},

year={2021},

booktitle = {IEEE International Conference on Robotics and Automation (ICRA)},

publisher = {IEEE}

}

Contact

If you have questions or comments, please contact Yaqi Xie.

Acknowledgements

This work was supported in part by a MOE Tier 1 Grant to Harold Soh and by the National Research Foundation Singapore under its AI Singapore Programme [AISG-RP-2018-005]. It was also supported by the National Research Foundation, Prime Minister’s Office, Singapore under its Strategic Capability Research Centres Funding Initiative.