Juiced and Ready to Predict Private Information in Deep Cooperative Reinforcement Learning, Eugene Lim★, Bing Cai Kok★, Songli Wang★, Joshua Lee★, Harold Soh★, ACM/IEEE International Conference on Human-Robot Interaction (HRI)

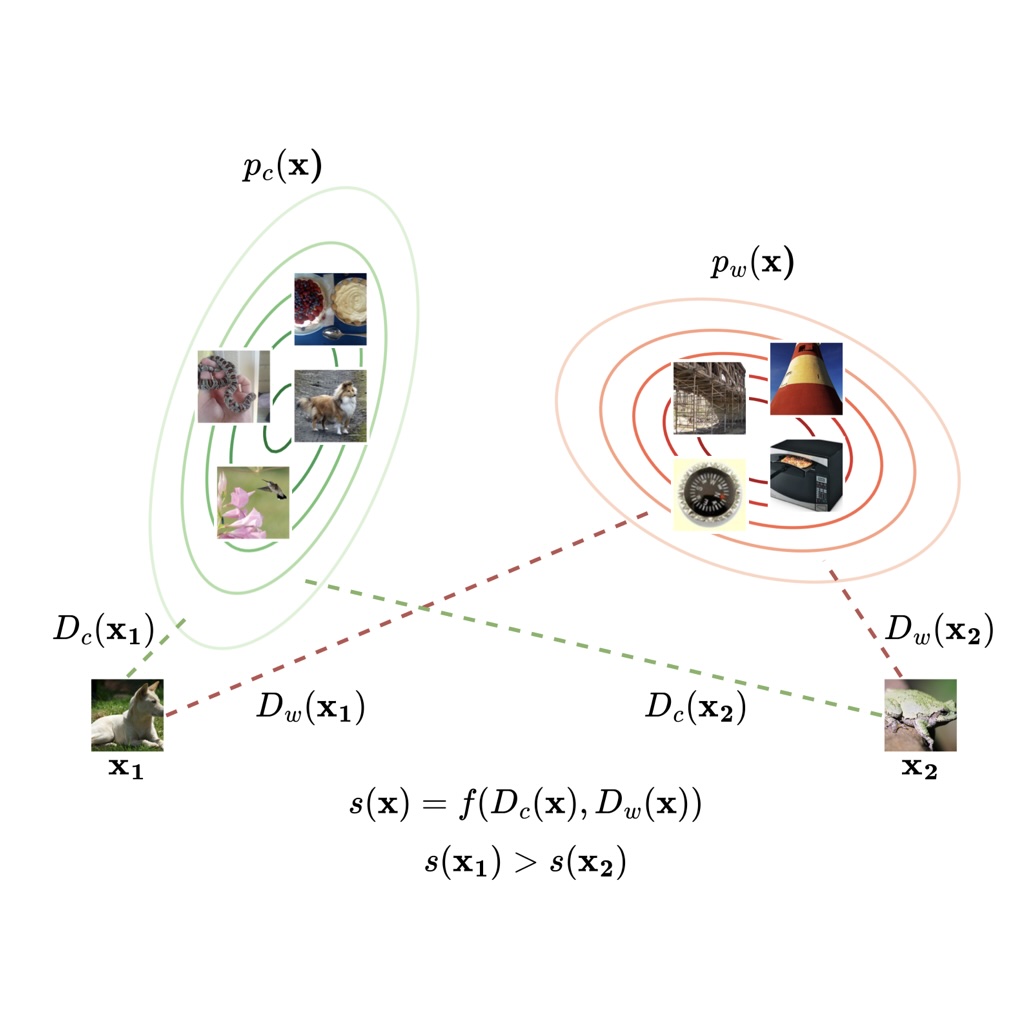

In human-robot collaboration settings, each agent often has access to private information (PI) that is unavailable to others. Examples include task preferences, objectives, and beliefs. Here, we focus on human-robot dyadic scenarios where the human has private information but is unable to convey it directly to the robot. We present Q-Network with Private Information and Cooperation (Q-PICo), a method for training robots that can interactively assist humans with PI. In contrast to existing approaches, we explicitly model PI prediction, leading to a more interpretable network architecture. We also contribute Juiced, an environment inspired by the popular video game Overcooked, to test Q-PICo and other related methods for human-robot collaboration. Our initial experiments in Juiced show that agents trained with Q-PICo can accurately predict PI and exhibit collaborative behavior.
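To make the idea of explicit PI prediction more concrete, here is a minimal sketch. It is not the Q-PICo architecture from the paper. It assumes a hypothetical linear network with two heads: one predicts a probability distribution over the human's PI (for example, which juice the human wants, an illustrative assumption), and the other outputs one Q-value per robot action, conditioned on the observation together with that predicted PI. Training combines a one-step temporal-difference (TD) loss with a cross-entropy loss on the true PI. The names `PIQNetworkSketch`, `combined_loss`, `gamma`, and `pi_weight` are all hypothetical.

```python
import math
import random


def softmax(logits):
    # Numerically stable softmax: subtract the max logit before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(weights, x):
    # Multiply a weight matrix (list of rows) by a vector.
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


class PIQNetworkSketch:
    """Hypothetical linear Q-network with an explicit private-information (PI) head."""

    def __init__(self, obs_dim, num_pi, num_actions, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        # PI head: observation -> logits over the human's possible PI values.
        self.w_pi = init(num_pi, obs_dim)
        # Q head: [observation, predicted PI distribution] -> one Q-value per action.
        self.w_q = init(num_actions, obs_dim + num_pi)

    def forward(self, obs):
        pi_probs = softmax(matvec(self.w_pi, obs))
        q_values = matvec(self.w_q, list(obs) + pi_probs)
        return pi_probs, q_values


def combined_loss(net, obs, action, reward, next_obs, done, true_pi,
                  gamma=0.99, pi_weight=1.0):
    """Squared TD error plus weighted cross-entropy on the true PI label."""
    pi_probs, q_values = net.forward(obs)
    # For brevity the bootstrap target reuses the same network (no target network).
    _, next_q = net.forward(next_obs)
    target = reward + (0.0 if done else gamma * max(next_q))
    td_loss = (q_values[action] - target) ** 2
    # Small epsilon guards against log(0).
    pi_loss = -math.log(pi_probs[true_pi] + 1e-12)
    return td_loss + pi_weight * pi_loss


if __name__ == "__main__":
    net = PIQNetworkSketch(obs_dim=4, num_pi=3, num_actions=5)
    obs = [1.0, 0.0, 0.5, 0.2]
    pi_probs, q_values = net.forward(obs)
    print("predicted PI distribution:", pi_probs)
    print("Q-values:", q_values)
    print("loss:", combined_loss(net, obs, action=2, reward=1.0,
                                 next_obs=[0.0, 1.0, 0.5, 0.2], done=False, true_pi=1))
```

Because the PI head produces an explicit probability distribution, one can inspect what the agent currently believes about the human's private information, which illustrates one sense in which explicit PI modeling can aid interpretability. A practical implementation would use a deep network, a separate target network, and a replay buffer, all omitted here for brevity.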

Resources

You can find our paper here. Check out our repository on GitHub here.

Citation

Please consider citing our paper if you build upon our results and ideas.

Eugene Lim★, Bing Cai Kok★, Songli Wang★, Joshua Lee★, Harold Soh★, “Juiced and Ready to Predict Private Information in Deep Cooperative Reinforcement Learning”, ACM/IEEE International Conference on Human-Robot Interaction (HRI)

@inproceedings{lim2020juiced,
  title={Juiced and Ready to Predict Private Information in Deep Cooperative Reinforcement Learning},
  author={Lim, Eugene and Kok, Bing Cai and Wang, Songli and Lee, Joshua and Soh, Harold},
  booktitle={ACM/IEEE International Conference on Human-Robot Interaction (HRI)},
  pages={343--345},
  year={2020},
  doi={10.1145/3371382.3378308},
  publisher={Association for Computing Machinery}
}

Contact

If you have questions or comments, please contact Eugene Lim.

Acknowledgements

This work is supported by the National Research Foundation Singapore under Grant No.: AISG-RP-2019-011.