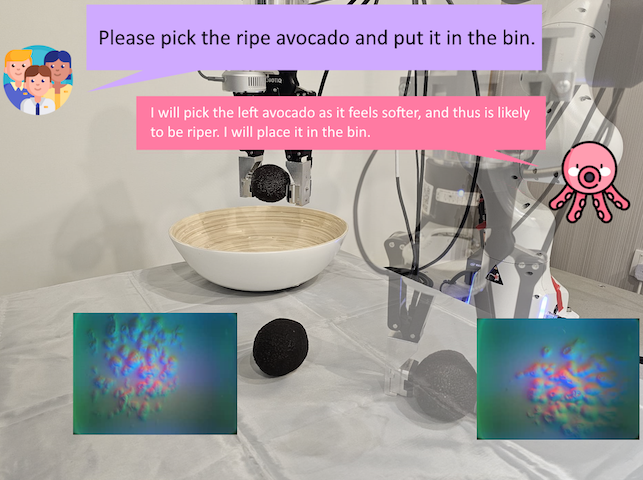

Know When to Abstain: Optimal Selective Classification with Likelihood Ratios

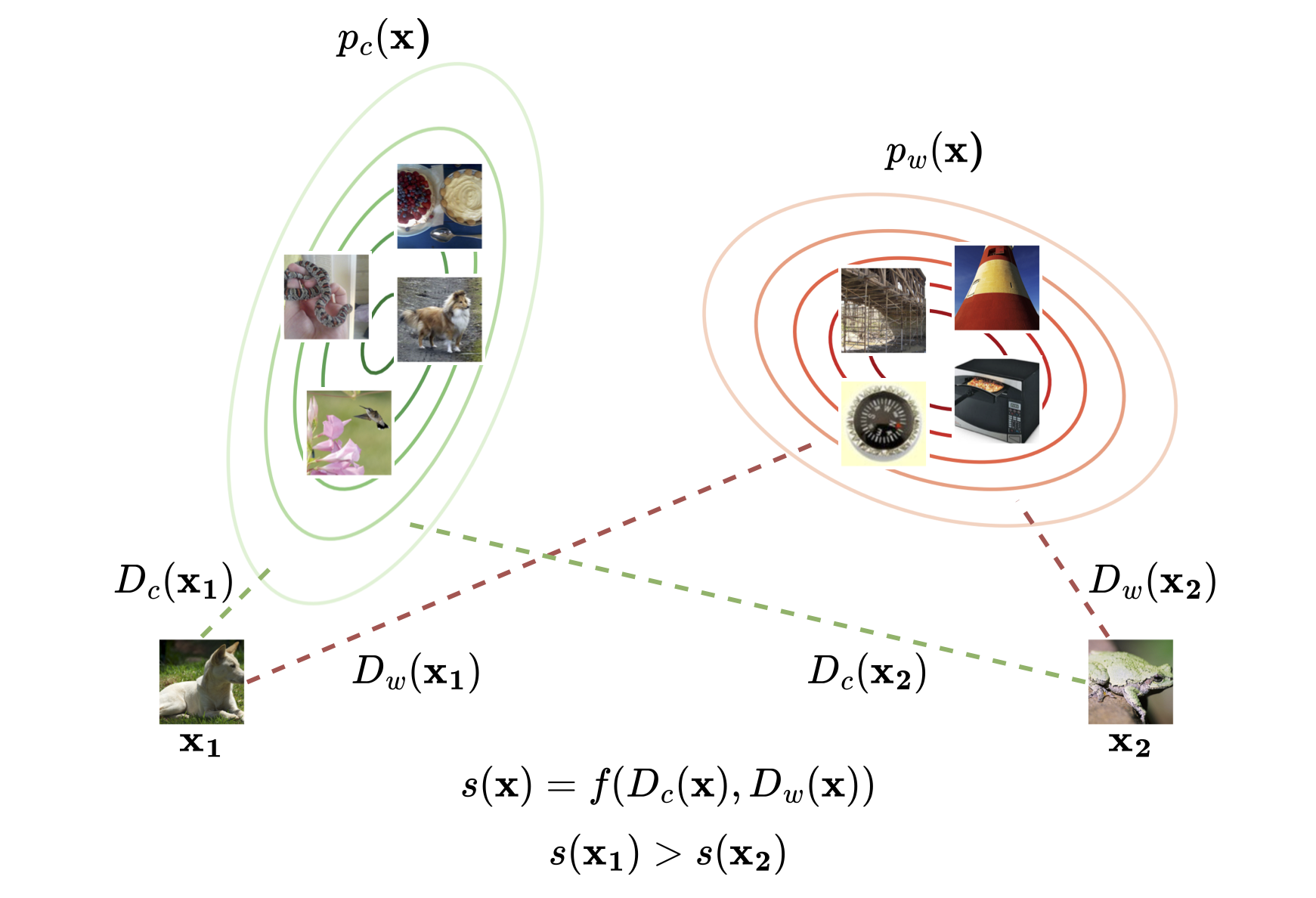

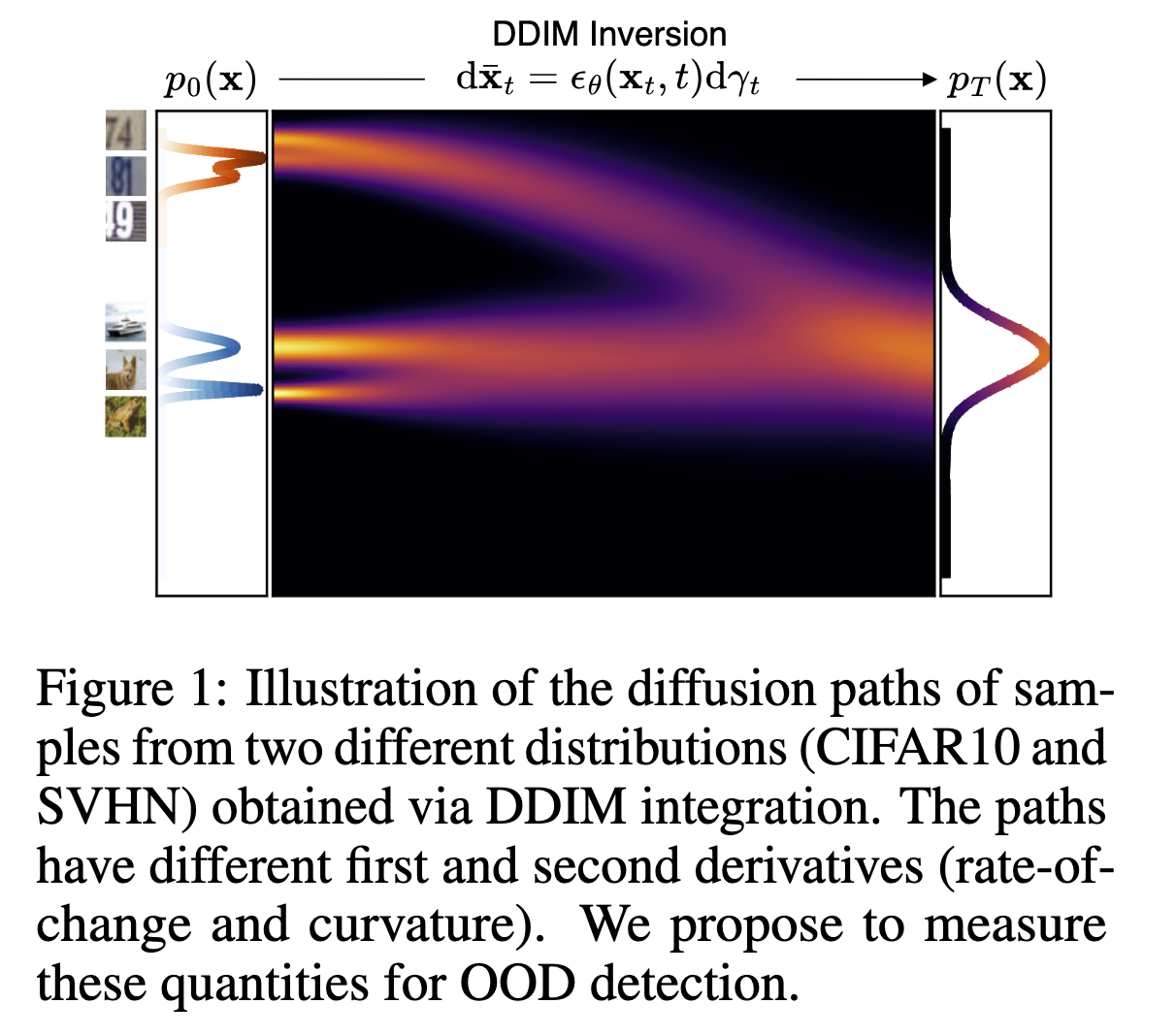

We propose likelihood-ratio-based selective classification methods whose optimality follows from the Neyman-Pearson lemma, and we evaluate them on vision and language tasks under covariate shift.
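To make the Neyman-Pearson idea concrete, here is a minimal sketch. It is not the authors' method. It treats "keep this prediction" as a hypothesis test between "the prediction is correct" and "the prediction is incorrect", scores each input by the log-likelihood ratio of the two, and abstains below a threshold. The threshold is chosen so that at most a fraction `alpha` of incorrect calibration predictions are accepted. Everything beyond that idea is an assumption made for illustration: a single scalar confidence score per example, Gaussian class-conditional densities, and the hypothetical names `LikelihoodRatioSelector`, `fit`, `llr` and `accept`. The optimality guarantee holds only if the fitted densities are exact.

```python
import math
from statistics import mean, stdev


def gaussian_logpdf(x: float, mu: float, sigma: float) -> float:
    """Log-density of a univariate normal distribution N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return -0.5 * z * z - math.log(sigma * math.sqrt(2.0 * math.pi))


class LikelihoodRatioSelector:
    """Hypothetical selective-classification wrapper (illustration only).

    Accepts a prediction when log p(s | correct) - log p(s | incorrect) > tau,
    where s is a scalar confidence score. ASSUMPTION: both class-conditional
    densities are modelled as Gaussians fitted on calibration data.
    """

    def fit(self, scores, correct, alpha):
        """Fit the two densities and choose tau in Neyman-Pearson style.

        `correct[i]` says whether the base classifier got example i right.
        `alpha` caps the fraction of incorrect calibration predictions that
        are accepted (the false-acceptance rate). Each group needs >= 2 items.
        """
        pos = [s for s, c in zip(scores, correct) if c]
        neg = [s for s, c in zip(scores, correct) if not c]
        self.mu_pos, self.sd_pos = mean(pos), stdev(pos)
        self.mu_neg, self.sd_neg = mean(neg), stdev(neg)
        # Log-likelihood ratios of the incorrect examples, largest first.
        neg_llr = sorted((self.llr(s) for s in neg), reverse=True)
        k = math.floor(alpha * len(neg_llr))  # max incorrect ones we may accept
        # Accepting only llr > neg_llr[k] admits at most k incorrect examples.
        self.tau = neg_llr[k] if k < len(neg_llr) else -math.inf
        return self

    def llr(self, s: float) -> float:
        """Log-likelihood ratio of 'correct' vs 'incorrect' for score s."""
        return (gaussian_logpdf(s, self.mu_pos, self.sd_pos)
                - gaussian_logpdf(s, self.mu_neg, self.sd_neg))

    def accept(self, s: float) -> bool:
        """True = keep the prediction, False = abstain."""
        return self.llr(s) > self.tau


# Synthetic calibration scores: ten correct and ten incorrect predictions.
correct_scores = [0.80, 0.85, 0.90, 0.92, 0.95, 0.97, 0.99, 0.88, 0.93, 0.96]
wrong_scores = [0.40, 0.50, 0.55, 0.60, 0.65, 0.70, 0.75, 0.58, 0.62, 0.68]
sel = LikelihoodRatioSelector().fit(
    correct_scores + wrong_scores, [True] * 10 + [False] * 10, alpha=0.1)
print(sel.accept(0.95))  # True
print(sel.accept(0.30))  # False
```

The selector thresholds the ratio rather than the raw score, and this is the design choice the Neyman-Pearson lemma motivates. With unequal variances, the Gaussian log-likelihood ratio is quadratic in `s`. The acceptance region can therefore be an interval rather than a single cutoff on confidence.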